This section covers the author's speculations about the future of data systems.

Martin Kleppmann:

The goal of data integration is to make sure that data ends up in the right form in all the right places

The ability to create derived views of the source data allows for gradual evolution of the entire application. Data integration (batch and stream) plays the major role in creating derived views. Let's discuss two architecture styles related to data integration.

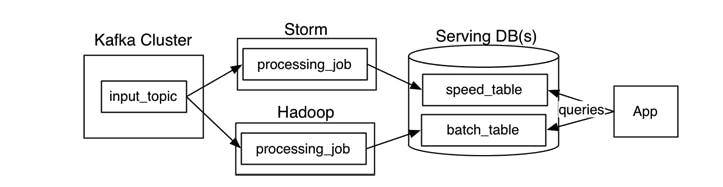

Lambda Architecture

Image Source: O'Reilly

- As the picture above shows, there are two branches of integration: a batch layer and a streaming layer

- Immutable event data is fed into both the streaming and batch layers

- Transformation is done in both the streaming and batch layers

- The view is derived from a combination of the streaming output (recent events) and the batch output (historic events)
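
To make the merge step concrete, here is a minimal sketch of the two branches and the serving step. The event shape (`ts`, `page`), the `cutoff` timestamp, and all function names are assumptions made for illustration; they are not taken from the book.

```python
from collections import Counter

def batch_view(events, cutoff):
    """Batch layer (hypothetical): count page views for events before the cutoff."""
    return Counter(e["page"] for e in events if e["ts"] < cutoff)

def speed_view(events, cutoff):
    """Streaming layer (hypothetical): count page views at or after the cutoff."""
    return Counter(e["page"] for e in events if e["ts"] >= cutoff)

def serve(batch, speed):
    """Serving step: the view is the combination of both outputs."""
    return batch + speed

# The same immutable events are fed to both layers.
events = [
    {"ts": 1, "page": "/home"},
    {"ts": 2, "page": "/about"},
    {"ts": 5, "page": "/home"},
    {"ts": 7, "page": "/home"},
]
cutoff = 5  # assumed timestamp of the last completed batch run

merged = serve(batch_view(events, cutoff), speed_view(events, cutoff))
print(merged)
```

Note that the counting logic appears twice, once per layer, which is the duplication that the drawbacks listed next refer to.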

➕ Can reprocess data multiple times

➕ Batch workflows are a bit easier to reason about

➖ Logic in two places

➖ Need to combine output of batch and stream

➖ Reprocessing the entire history is time-intensive, so the batch layer needs to be incremental as well

How do we address the limitations of the Lambda architecture? Can we unify batch and streaming into a single component? To achieve this, we need the following features:

- The ability to replay historical events through the same processing engine that handles the stream of recent events

- Exactly-once semantics for stream processors

- Tools for windowing by event time, not by processing time

This led to a new architectural pattern: Kappa Architecture
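
Two of the features listed above, replaying history through the same engine and windowing by event time, can be sketched together. This is an illustration under assumptions: the `WindowCounter` class, the `event_time` field, and the window size are hypothetical, and exactly-once delivery is not modelled here.

```python
from collections import defaultdict

class WindowCounter:
    """Hypothetical processor: counts events per tumbling window of event time."""

    def __init__(self, window_size):
        self.window_size = window_size
        self.counts = defaultdict(int)

    def process(self, event):
        # The window is chosen from the event's own timestamp, not from the
        # moment the event happens to be processed.
        start = (event["event_time"] // self.window_size) * self.window_size
        self.counts[start] += 1

# Historical log, stored in arrival order (event_time 8 arrived late).
log = [{"event_time": 3}, {"event_time": 12}, {"event_time": 8}, {"event_time": 15}]

processor = WindowCounter(window_size=10)

# Replay: history goes through the same code path as live traffic.
for event in log:
    processor.process(event)

# Live: new events simply continue on the same processor.
processor.process({"event_time": 21})
processor.process({"event_time": 9})  # a straggler still lands in window 0

print(dict(processor.counts))
```

Because there is only one `process` method, there is no second copy of the logic to keep in sync, and reprocessing amounts to creating a fresh processor and feeding it the log from the beginning.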

The entire flow of data can be modelled as a dataflow architecture pattern.

- Whenever the state in a database changes, an event is emitted

- A stream processor processes the event and creates a derived view

- What if data is needed from two different systems? Data from both has to be maintained in the local database of the stream processor (in a time-series manner, so that older versions remain available)
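
The last point can be sketched as a stream processor that keeps a local, time-ordered copy of one stream and uses it to enrich events from another. The purchase and exchange-rate scenario, the class name, and the method names are assumptions chosen for illustration.

```python
import bisect

class PurchaseEnricher:
    """Hypothetical stream processor joining purchases with exchange rates."""

    def __init__(self):
        # Local state: rate changes kept in timestamp order (time-series style).
        self.rate_times = []
        self.rate_values = []
        self.view = []  # derived view: purchases converted at the valid rate

    def on_rate_change(self, ts, rate):
        i = bisect.bisect_right(self.rate_times, ts)
        self.rate_times.insert(i, ts)
        self.rate_values.insert(i, rate)

    def on_purchase(self, ts, amount):
        # Find the latest rate whose timestamp is <= the purchase timestamp.
        i = bisect.bisect_right(self.rate_times, ts) - 1
        if i < 0:
            raise LookupError("no exchange rate known at time %r" % ts)
        self.view.append({"ts": ts, "converted": amount * self.rate_values[i]})

enricher = PurchaseEnricher()
enricher.on_rate_change(ts=1, rate=2.0)
enricher.on_rate_change(ts=5, rate=1.5)
enricher.on_purchase(ts=3, amount=100)  # uses the rate valid at ts=3
enricher.on_purchase(ts=6, amount=50)   # uses the rate valid at ts=6

print(enricher.view)
```

Keeping the history of rates, rather than only the latest one, means a purchase replayed later is still joined with the rate that applied at its own timestamp.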

Dataflow architecture offers the following benefits

- Dataflow systems can maintain integrity guarantees on derived data without atomic commit, linearizability, or synchronous cross-partition coordination.

- Many applications are actually fine with eventual consistency as long as integrity is maintained

So far we have discussed systems where updates or writes are modeled as events. Read requests can also be represented as a stream of events: the stream processor handles each request and emits the result to an output stream. This is suitable for asynchronous communication.
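
As a rough sketch of reads as events, the snippet below treats each read as a message on a request stream and writes the answer to a response stream. The in-memory `deque` objects stand in for real streams, and the field names (`request_id`, `page`) are hypothetical.

```python
from collections import deque

# Derived view maintained elsewhere by the stream processor (assumed contents).
view = {"/home": 3, "/about": 1}

requests = deque([
    {"request_id": "r1", "page": "/home"},
    {"request_id": "r2", "page": "/missing"},
])
responses = deque()

def handle_read(request, view):
    """Answer one read event by looking it up in the derived view."""
    return {
        "request_id": request["request_id"],
        "count": view.get(request["page"], 0),
    }

# The processor consumes read events just like write events.
while requests:
    responses.append(handle_read(requests.popleft(), view))

print(list(responses))
```

The `request_id` is what lets an asynchronous caller match a response on the output stream to the request it sent earlier.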

The author ends the section with the message:

Trust but Verify

To expand on that: systems can fail, so we must periodically check the integrity of our data. The author develops the idea of building audits into systems for verification.
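
One simple form such an audit could take is to recompute a derived view from the event log and compare it with the view currently being served. This is a sketch under assumptions: the page-count view and the function names are hypothetical, and real audits may rely on other techniques such as checksums.

```python
from collections import Counter

def rebuild_view(event_log):
    """Recompute the derived view from scratch, using the log as source of truth."""
    return Counter(e["page"] for e in event_log)

def audit(event_log, derived_view):
    """Return {key: (expected, actual)} for every entry that does not match."""
    expected = rebuild_view(event_log)
    keys = set(expected) | set(derived_view)
    return {
        k: (expected.get(k, 0), derived_view.get(k, 0))
        for k in keys
        if expected.get(k, 0) != derived_view.get(k, 0)
    }

event_log = [{"page": "/home"}, {"page": "/about"}, {"page": "/home"}]

healthy_view = {"/home": 2, "/about": 1}
corrupted_view = {"/home": 3, "/about": 1}  # simulated silent corruption

print(audit(event_log, healthy_view))
print(audit(event_log, corrupted_view))
```

An empty result means the view matches the log; any entry in the result points at a key whose derived value has drifted.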

The final section is dedicated to ethics, or, in the author's own words:

Doing the right thing