In this post, I am going to present a system architecture that we (Krithika, Kaushik and I) designed for a video platform loosely based on YouTube and Netflix. The post covers our journey in designing the architecture and the final architecture we presented.

I will start with why we even tried this venture. One of the ways our company, Thoughtworks, fosters learning and innovation is through communities of interest. One such community recently gaining momentum in our office is the System Design community. As part of its activities, the community came up with 3 problem statements and asked people to form teams to design an architecture for each. Thus our Archwar began.

Problem Statement

ThoughtFlix is an OTT platform. Wished for a one-stop platform to watch all events in Thoughtworks? Help us build ThoughtFlix where TWers Login to view private events. ThoughtFlix should stream seamlessly with lesser latency. Give it a personal touch by showing events of interest and recommendations and ability to view a preview of the event when hovered over a video. How would you store these videos? Design an architecture to cater to these needs in an efficient way.

With this, our trio translated the statement above into a set of requirements to base our architecture on. Below are the rough points we used as the basis for further ideation.

- Public vs private events

- Login to view private events

- Low latency in streaming

- Events of my interest, Recommendations

- Video storage - Object store

- Paid workshop videos (can be a paid feature), free for ThoughtWorkers

- Video preview when hovered over a video

Ideation

Excerpt from Fundamentals of Software Architecture

Software architecture consists of four parts:

- structure of the system (such as microservices, layered, or microkernel)

- architecture characteristics (“-ilities”)

- architecture decisions, which form the constraints of the system

- design principles, which provide guidance for the preferred method (in this case, asynchronous messaging)

We took this as our basis and designed our architecture around it.

Each architecture style supports a particular set of characteristics well, so our first goal was to identify the key characteristics our problem statement needed.

Modularity

Do we see the system as a single cohesive unit, or is there a need for logical partitions?

Answering this question led us to the following bounded contexts:

- Playback

- Personalization

- Payment

- Recommender

- Search

- Entitlement

- User Behaviour Tracking

- Admin

- Auth

We used Mural for this, and I highly recommend it for collaborative brainstorming.

Next we debated the MVP. Do we need to build all the logical partitions up front? Can we build just a portion? The writing on the wall was clear: Playback was essential to validate the product, while the rest of the modules could be built later.

Thus modularity was an implicit and necessary characteristic.

Evolutionary

Should we build the system incrementally and support constant change?

This is tied to the previous characteristic. Our approach was to build the application towards a target architecture, progressing incrementally and course-correcting along the way. Here is an example.

We don't need an Admin module for the MVP. All that matters is that the video is available to play. We can simply have a web page that lists all the videos manually uploaded to object storage. In this case, we would need only the following domains:

- Playback

- Search (Primitive version to list all)
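To make the MVP concrete, here is a minimal sketch of the primitive "list all" search, assuming the raw videos sit in a single S3 bucket and are served from some base URL. The function names, the `base_url` parameter and the set of video extensions are hypothetical; the client only needs boto3's `list_objects_v2` method, so a real `boto3.client("s3")` or a test fake both work.

```python
import html
from typing import Any, Iterable, Iterator
from urllib.parse import quote

# Hypothetical: file extensions we treat as videos in the MVP bucket.
VIDEO_EXTENSIONS = (".mp4", ".mov", ".mkv")


def iter_video_keys(s3_client: Any, bucket: str) -> Iterator[str]:
    """Yield every video object key in the bucket, following S3 pagination."""
    kwargs = {"Bucket": bucket}
    while True:
        resp = s3_client.list_objects_v2(**kwargs)
        for obj in resp.get("Contents", []):
            if obj["Key"].lower().endswith(VIDEO_EXTENSIONS):
                yield obj["Key"]
        # S3 returns at most 1,000 keys per call; keep going while truncated.
        if not resp.get("IsTruncated"):
            break
        kwargs["ContinuationToken"] = resp["NextContinuationToken"]


def render_listing(keys: Iterable[str], base_url: str) -> str:
    """Render a bare-bones HTML list linking each video under base_url."""
    items = [
        f'<li><a href="{base_url}/{quote(key)}">{html.escape(key)}</a></li>'
        for key in keys
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"
```

Usage would be along the lines of `render_listing(iter_video_keys(boto3.client("s3"), "my-video-bucket"), "https://cdn.example.com")`, with the bucket name and URL being placeholders.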

Availability

How long does the system need to be available?

Not all modules of the system require the same level of availability. Taking the MVP scenario above, Playback needs to be available almost 24x7 with only minor interruptions. Users can tolerate occasional interruptions, but the product's reputation is at stake if outages are frequent. This is an implicit requirement.
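To put "almost 24x7" in numbers, it helps to translate an availability target into a downtime budget. The targets below are hypothetical examples for illustration, not figures we committed to.

```python
def downtime_budget_minutes(availability: float, period_days: float = 30) -> float:
    """Minutes of allowed downtime in a period for a given availability target."""
    if not 0 <= availability <= 1:
        raise ValueError("availability must be a fraction between 0 and 1")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability)


# Compare a few common targets over a 30-day month.
for target in (0.99, 0.999, 0.9999):
    print(f"{target:.2%}: {downtime_budget_minutes(target):.1f} min per 30 days")
```

For example, a 99.9% target for Playback would leave roughly 43 minutes of downtime in a 30-day month, while less critical modules such as Admin could live with a looser target.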

Scalability

The ability of the system to perform and operate as the number of users or requests increases.

Yes, absolutely. Our customer's vision was that this platform would scale globally at a rapid pace. This is highlighted by the requirement for a "one-stop platform to watch all events in Thoughtworks".

A close cousin is elasticity, which is automatic scaling on demand. Since we chose the AWS stack (all three of us are very familiar with it), this seemed straightforward with the right choices. However, elasticity is not a chief characteristic at the MVP stage.

Performance

What response time can our users tolerate?

This was a critical characteristic for the Playback Service. The low-latency streaming requirement explicitly calls out the need for it.

Authentication & Authorization

This is self-explanatory and was an explicit requirement: "TWers Login to view private events".

Fault Tolerance

Can the system operate even if parts of it fail?

This was not explicitly mentioned in the requirements, but it is a valuable characteristic: it ensures that Playback can continue even when other parts of the system suffer transient failures.

Our Choice

We used the characteristics above to compare the different architecture styles, and the comparison showed that a microservices architecture was a good fit for our use case.

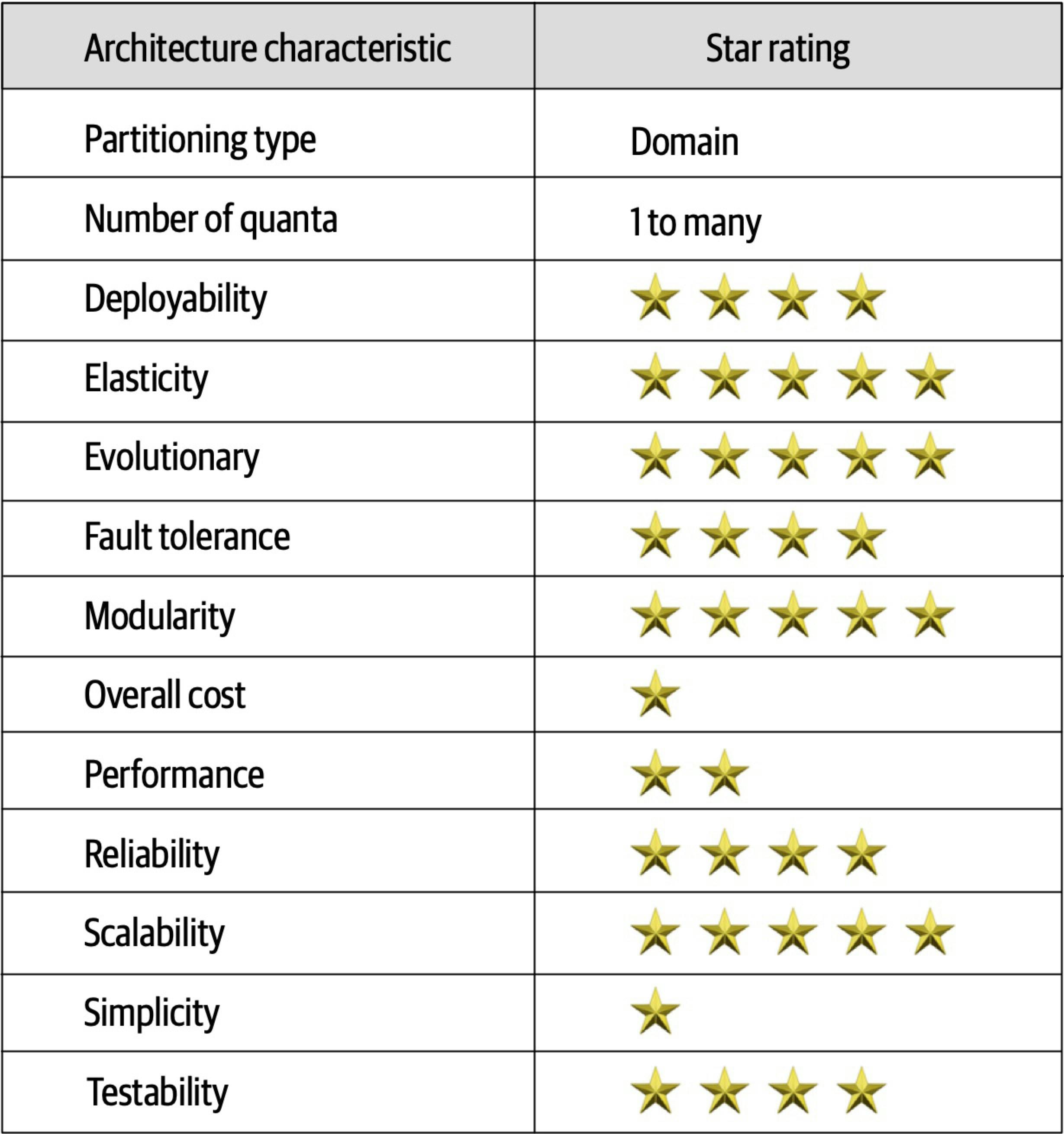

Microservices characteristics (image source: Fundamentals of Software Architecture)


Can we address the performance requirement and also keep the systems decoupled? Our choice was event-driven microservices. This meant that each module/service was fairly decoupled and could scale independently based on its needs.

The next question was how the microservices should communicate. Should we allow constant communication between them?

We adhered to this principle:

A microservice will call another microservice if it needs that service to perform some operation, but will replicate the data if it only needs data.
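As an illustration of the "replicate if you only need data" half of this principle, here is a minimal sketch of a service keeping its own read-only copy of entitlements, fed by events, so it never has to call the Payment service at request time. The event shape (`type`, `user_id`, `video_id`) and the event names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class EntitlementReplica:
    """Local copy of (user, video) entitlements, rebuilt purely from events."""

    granted: set[tuple[str, str]] = field(default_factory=set)

    def apply(self, event: dict) -> None:
        kind = event.get("type")
        if kind not in ("EntitlementGranted", "EntitlementRevoked"):
            return  # ignore unrelated events so producers can evolve independently
        key = (event["user_id"], event["video_id"])
        if kind == "EntitlementGranted":
            self.granted.add(key)
        else:
            self.granted.discard(key)

    def has_access(self, user_id: str, video_id: str) -> bool:
        # Answered from local data: no synchronous call to the Payment service.
        return (user_id, video_id) in self.granted
```

The trade-off is eventual consistency: a purchase becomes visible to the consuming service only once the event arrives, in exchange for that service staying up even when Payment is down.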

Should we do choreography or orchestration?

Predominantly it was choreography, following the event-driven architecture with no central service. The exception was the Recommender Service, which depends on the Search Service for its use case. We cover that in the next section while discussing the architecture.

High-Level Architecture (using the AWS Stack)

With ideation over, the next step was to formulate the architecture.

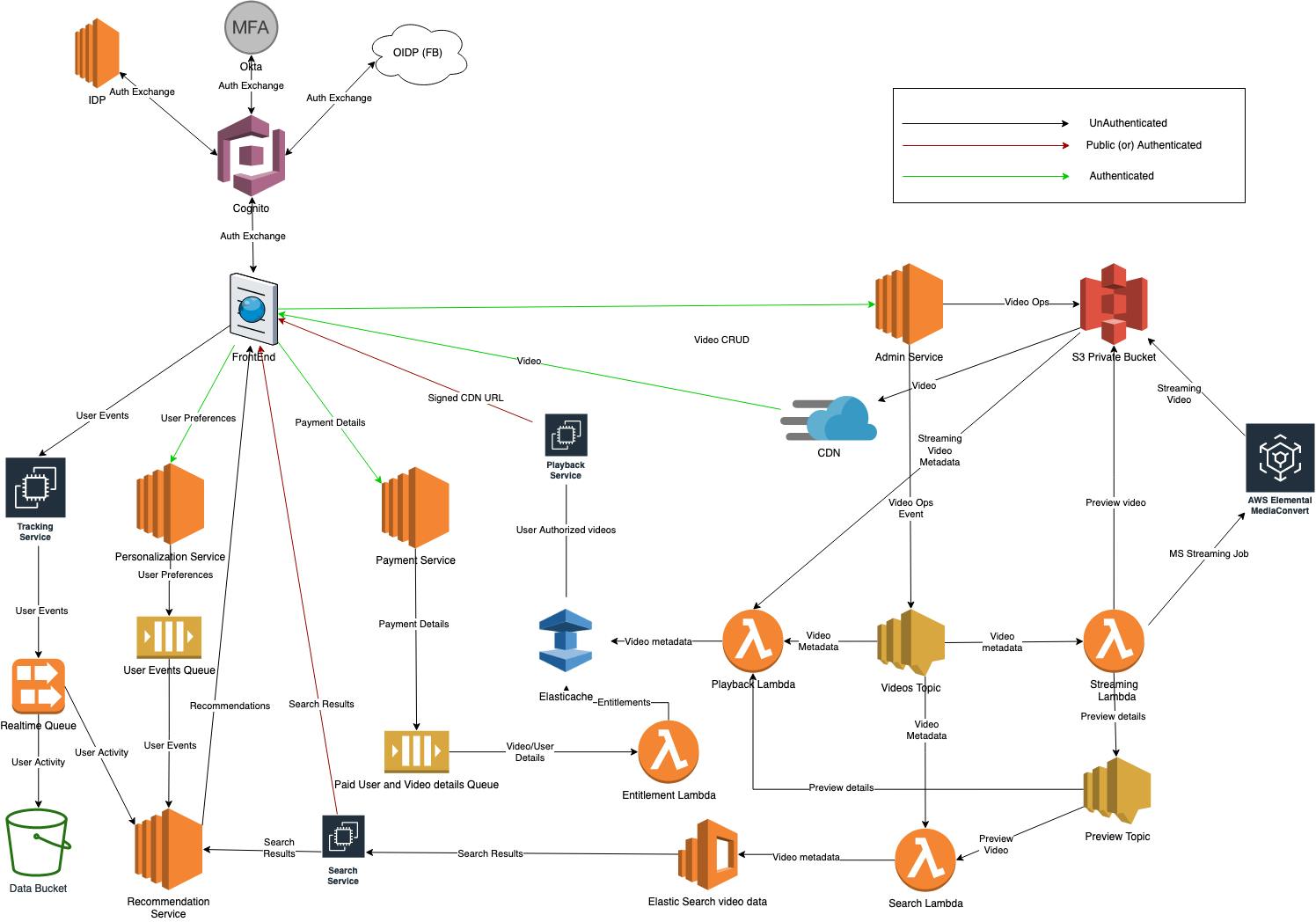

Architecture diagram


Authentication

Paid users need to be authenticated, and so do TWers. We needed 3 types of auth integration:

- Social/OIDP

- Okta

- Accounts created directly on the site

We found Cognito to be a good fit for this purpose.

Admin Services

Only authenticated TWers can upload videos. For all user-facing services, our idea was to use EKS instead of Lambda. Lambda has the cold start problem, though AWS is continuously pushing the boundaries there.

Once an Admin user uploads the video to S3, the Admin Service emits a Video Uploaded event to an SNS topic. The Admin Service also emits events when a video is updated or deleted.

Each service maintains its own database, which is not explicitly shown in the diagram. Most of our services did not need relational capabilities, so we chose DynamoDB.
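A minimal sketch of how the Admin Service might publish these events, assuming boto3's SNS client. The topic ARN, event names and payload fields are hypothetical; the `eventType` message attribute is there so that subscribers could use SNS subscription filter policies if they only care about some event types.

```python
import json
from datetime import datetime, timezone
from typing import Any

# Hypothetical event names for the video lifecycle.
VIDEO_EVENTS = {"VideoUploaded", "VideoUpdated", "VideoDeleted"}


def publish_video_event(sns_client: Any, topic_arn: str, event_type: str,
                        video_id: str, s3_key: str, title: str) -> str:
    """Publish a video lifecycle event to the SNS topic; returns the MessageId."""
    if event_type not in VIDEO_EVENTS:
        raise ValueError(f"unknown event type: {event_type}")
    payload = {
        "type": event_type,
        "video_id": video_id,
        "s3_key": s3_key,
        "title": title,
        "occurred_at": datetime.now(timezone.utc).isoformat(),
    }
    resp = sns_client.publish(
        TopicArn=topic_arn,
        Message=json.dumps(payload),
        MessageAttributes={
            "eventType": {"DataType": "String", "StringValue": event_type},
        },
    )
    return resp["MessageId"]
```

In the service this would be called with `boto3.client("sns")` right after the S3 upload succeeds.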

Video Conversion

Once the video topic receives a video event, it fans out to multiple consumers. The consumer we discuss here is the media conversion service. We wanted to use the MS-SSTR (Smooth Streaming) format for videos, as it provides a good adaptive streaming experience for end users.

We wanted to serve the videos via CloudFront to reduce end-user latency. With these factors in mind, the video conversion Lambda subscribed to the video topic first generates a preview video. Once this step succeeds, a Preview Video Created event is triggered.

It then triggers an AWS Elemental MediaConvert job to convert the video to MS-SSTR format. Once the conversion is done and the converted video is in S3, it triggers the Video Conversion Successful event.
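The ordering above (preview first, then the full conversion) can be sketched as an SNS-triggered Lambda handler. The record shape (`Records[i]["Sns"]["Message"]`) is the standard one a Lambda receives from SNS; the three injected callables are hypothetical stand-ins for the real preview generation, the MediaConvert `create_job` call and the SNS publish, so the flow can be tested without AWS.

```python
import json
from typing import Any, Callable


def make_handler(create_preview: Callable[[str], str],
                 start_conversion: Callable[[str], str],
                 publish: Callable[[dict], None]) -> Callable[[dict, Any], None]:
    """Build a Lambda handler; the injected helpers are hypothetical."""

    def handler(event: dict, context: Any) -> None:
        for record in event["Records"]:
            message = json.loads(record["Sns"]["Message"])
            if message.get("type") != "VideoUploaded":
                continue  # this consumer only reacts to new uploads
            video_id, s3_key = message["video_id"], message["s3_key"]

            # Step 1: cut a short preview clip and announce it.
            preview_key = create_preview(s3_key)
            publish({"type": "PreviewVideoCreated",
                     "video_id": video_id, "preview_key": preview_key})

            # Step 2: submit the long-running conversion job. Its completion
            # arrives asynchronously, and that is when "Video Conversion
            # Successful" would be published.
            job_id = start_conversion(s3_key)
            print(f"conversion job {job_id} started for {video_id}")

    return handler
```

Keeping the handler short-lived and leaving completion to a separate notification avoids holding a Lambda open for the duration of a transcode.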

Playback

This is the critical component of our design. It listens to all 3 events triggered so far.

The service uses ElastiCache (Redis) to store video information for quick retrieval. A Lambda subscribed to the 3 topics updates the corresponding video metadata (title, URL, preview URL and converted URL) in Redis on each event.

The Playback Service reads this information from Redis and provides the CloudFront URL to authenticated and authorized users.
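A minimal sketch of both halves: the Lambda merging each event into one Redis hash per video, and the Playback Service reading it back. It assumes a redis-py client created with `decode_responses=True` (so `hgetall` returns strings); the key scheme, field names, event shapes and the `authorized` flag are hypothetical. Falling back to the raw upload URL until conversion completes mirrors the behaviour the panel's feedback questions later in this post.

```python
from typing import Any, Optional


def video_key(video_id: str) -> str:
    return f"video:{video_id}"  # hypothetical key scheme


def apply_event(redis_client: Any, event: dict) -> None:
    """Merge one event into the video's metadata hash (runs in the Lambda)."""
    kind = event["type"]
    if kind == "VideoUploaded":
        fields = {"title": event["title"], "url": event["url"]}
    elif kind == "PreviewVideoCreated":
        fields = {"preview_url": event["preview_url"]}
    elif kind == "VideoConversionSuccessful":
        fields = {"converted_url": event["converted_url"]}
    else:
        return
    # HSET with a mapping only touches the given fields, so the three events
    # can arrive in any order without overwriting each other.
    redis_client.hset(video_key(event["video_id"]), mapping=fields)


def playback_url(redis_client: Any, video_id: str,
                 authorized: bool) -> Optional[str]:
    """Return the URL to stream, or None if unknown or not authorized."""
    if not authorized:
        return None
    meta = redis_client.hgetall(video_key(video_id))
    if not meta:
        return None
    # Prefer the converted stream; otherwise fall back to the raw upload.
    return meta.get("converted_url") or meta.get("url")
```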

Search

The Search Lambda also listens to all 3 events and maintains the data in Elasticsearch Service. Elasticsearch provides quick results for search queries on fields such as tags, speaker and title.

The Search Service additionally classifies the visibility of videos for each user; for example, free users see only the preview link for paid videos.
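The visibility rule can be sketched as a small function applied to each search hit before it is returned. The field names (`is_paid`, `private`, `preview_url`, `url`) and the `User` fields are hypothetical; following the requirements, paid workshop videos are free for TWers and private events need a logged-in TWer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    is_thoughtworker: bool      # TWers get paid workshop videos for free
    purchased: frozenset[str]   # video ids this user has paid for


def visible_hit(hit: dict, user: Optional[User]) -> Optional[dict]:
    """Return the hit as this user may see it, or None to hide it."""
    if hit.get("private") and not (user and user.is_thoughtworker):
        return None  # private events require a logged-in TWer
    view = {k: hit[k] for k in ("video_id", "title", "preview_url")}
    entitled = not hit.get("is_paid") or (
        user is not None
        and (user.is_thoughtworker or hit["video_id"] in user.purchased)
    )
    if entitled:
        view["url"] = hit["url"]
    # Otherwise only the preview link is exposed.
    return view
```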

Personalization

This service provides personalization such as the site language, video preferences and other such details. These are stored in DynamoDB, and events are also emitted for the Recommender Service. So far we have used SNS, but here we use SQS, for two main reasons:

- There is no fan-out; only one service consumes the events.

- The consumer is not a Lambda, so polling is a better way to consume the messages.
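Because the Recommender runs as a long-lived service rather than a Lambda, it pulls from the queue itself. A minimal long-polling loop with boto3's SQS client might look like the sketch below; the queue URL and the `handle` callback are hypothetical. Messages are deleted only after they are handled, so a crash leads to redelivery rather than loss.

```python
import json
from typing import Any, Callable


def poll_once(sqs_client: Any, queue_url: str,
              handle: Callable[[dict], None]) -> int:
    """Receive one batch with long polling, handle it, delete what succeeded."""
    resp = sqs_client.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS maximum per call
        WaitTimeSeconds=20,      # long polling: wait up to 20 s for messages
    )
    processed = 0
    for msg in resp.get("Messages", []):
        try:
            handle(json.loads(msg["Body"]))
        except Exception as exc:
            # Leave it on the queue; it reappears after the visibility timeout.
            print(f"failed to handle {msg['MessageId']}: {exc}")
            continue
        sqs_client.delete_message(QueueUrl=queue_url,
                                  ReceiptHandle=msg["ReceiptHandle"])
        processed += 1
    return processed


def run_consumer(sqs_client: Any, queue_url: str,
                 handle: Callable[[dict], None]) -> None:
    while True:  # runs for the lifetime of the service's pod
        poll_once(sqs_client, queue_url, handle)
```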

Payment

The service will integrate with a third-party payment provider but maintain each user's paid status for videos. This is stored in its internal database, and the service also posts an Entitlement event to an SQS queue.
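A sketch of the Payment Service's side of this, assuming boto3's SQS client and an in-memory set standing in for its internal database. The method name, field names and the `EntitlementGranted` event type are hypothetical. Since payment providers may retry their callbacks, the duplicate check makes a repeated confirmation a no-op instead of emitting a second event.

```python
import json
from typing import Any


class PaymentService:
    def __init__(self, sqs_client: Any, entitlement_queue_url: str):
        self.sqs = sqs_client
        self.queue_url = entitlement_queue_url
        self.paid: set[tuple[str, str]] = set()  # stand-in for the internal DB

    def on_payment_confirmed(self, user_id: str, video_id: str) -> bool:
        """Record the purchase and emit an Entitlement event.

        Returns False if this purchase was already recorded.
        """
        key = (user_id, video_id)
        if key in self.paid:
            return False  # duplicate callback from the provider: nothing to do
        self.paid.add(key)
        self.sqs.send_message(
            QueueUrl=self.queue_url,
            MessageBody=json.dumps({"type": "EntitlementGranted",
                                    "user_id": user_id, "video_id": video_id}),
        )
        return True
```

A production version would also need to handle the database write succeeding while the send fails, for example with an outbox table.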

Behaviour Tracking

This is concerned with tracking user activity on the site, such as:

- What is the user watching?

- Does the user watch the video in a single sitting?

- What types of videos is the user searching for?

This helps us understand user behaviour and give the user relevant recommendations. Given the large volume of events, the tracking service forwards them to Kinesis. From Kinesis, they eventually land in S3 for batch analytics and also reach the Recommender Service via Kinesis streams.
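A minimal sketch of the tracking service's producer, assuming boto3's Kinesis client. The stream name and event fields are hypothetical. Using the user id as the partition key sends all of one user's events to the same shard, which keeps them in order for the Recommender, and `put_records` sends up to 500 records per call to cope with the volume.

```python
import json
from typing import Any, Iterable

MAX_BATCH = 500  # PutRecords accepts at most 500 records per request


def send_activity(kinesis_client: Any, stream_name: str,
                  events: Iterable[dict]) -> list[dict]:
    """Send activity events in batches; return the events Kinesis rejected."""
    pending = list(events)
    failed: list[dict] = []
    for start in range(0, len(pending), MAX_BATCH):
        batch = pending[start:start + MAX_BATCH]
        resp = kinesis_client.put_records(
            StreamName=stream_name,
            Records=[{"Data": json.dumps(e).encode("utf-8"),
                      # same user -> same shard -> ordered per user
                      "PartitionKey": e["user_id"]}
                     for e in batch],
        )
        # PutRecords is not all-or-nothing: failed entries carry an ErrorCode,
        # and results come back in the same order as the request.
        if resp.get("FailedRecordCount", 0):
            failed.extend(e for e, r in zip(batch, resp["Records"])
                          if "ErrorCode" in r)
    return failed
```

The caller can retry the returned events with backoff.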

Recommender

This is the final piece of the puzzle. It accumulates user information from 3 different sources:

- User Profile

- User Activity

- User Entitlement

It maintains a complete model of each user in its database to provide recommendations. As pages load, it uses the user model to generate a query to the Search Service based on the model's parameters. Initially we plan to use content-based filtering: we extract parameters from the user model and use the Search Service to obtain recommendations.
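A minimal content-based filtering sketch: turn the user model into an Elasticsearch `bool` query that favours videos sharing the user's interests, frequently watched tags and favourite speakers, and skips what they have already watched. The user-model fields and the index field names (`tags`, `speaker`) are hypothetical; `bool`, `should`, `must_not`, `terms`, `ids` and `minimum_should_match` are standard Elasticsearch query DSL.

```python
from collections import Counter


def build_recommendation_query(user_model: dict, size: int = 10) -> dict:
    """Build an Elasticsearch query body from a (hypothetical) user model."""
    watched = user_model.get("watched", [])
    # Rank tags by how often they appear in the user's watch history.
    tag_counts = Counter(tag for video in watched for tag in video.get("tags", []))
    top_tags = [tag for tag, _ in tag_counts.most_common(5)]
    interests = list(user_model.get("interests", []))
    speakers = list(user_model.get("favourite_speakers", []))

    should = []
    if interests or top_tags:
        # Deduplicate while keeping order: explicit interests first.
        should.append({"terms": {"tags": list(dict.fromkeys(interests + top_tags))}})
    if speakers:
        should.append({"terms": {"speaker": speakers}})

    return {
        "size": size,
        "query": {
            "bool": {
                "should": should,
                # With no signals at all, fall back to matching everything.
                "minimum_should_match": 1 if should else 0,
                "must_not": [{"ids": {"values": [v["video_id"] for v in watched]}}],
            }
        },
    }
```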

Feedback

We presented the architecture diagram, along with class and sequence diagrams, to the Archwar panel. They gave us invaluable feedback, which I outline here.

- Good fault-tolerant and decoupled architecture

- The architecture currently supports only recorded events. What about live events?

- Good initial idea on data platform

- Raw videos are exposed if the conversion job fails. Does this not affect reputation? Would it not be better to show only converted videos, with full confidence?

We did not win the Archwar, but we got a special mention for our fault-tolerant architecture. It was a good experience to participate in the Archwar, and we learned a lot.

Ending with a tip from Fundamentals of Software Architecture:

Never shoot for the best architecture, but rather the least worst architecture.

Thanks for reading, and I would love to hear your feedback on the article.